Principal Investigator(s): Yelda Turkan

Associate Investigator(s): Michael J. Olsen, Fuxin Li, Roger Chen, Yong Cho

Senior Person(s): Jaehoon Jung, Erzhuo Che, Yeongjin Jang, Marta Maldonado, Jenny Liu, Sumeeta Srinivasan, Gene Roe, Paul Platosh, Aron Morris

Project Starting Year: 2020

Project Ending Year: 2021

Description (Abstract):

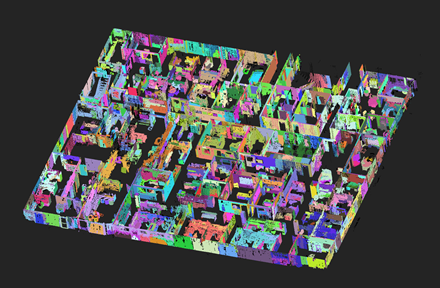

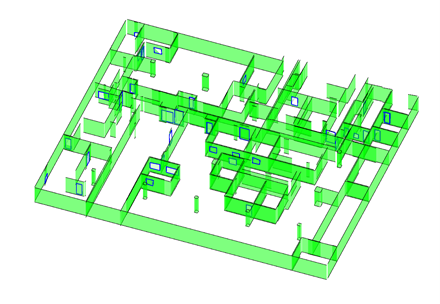

Automating the extraction of features and objects from remotely sensed geospatial data is seen as the Holy Grail by the Built Environment research community and industry. Sensors acquire ever-increasing amounts of raw geospatial data, which continues to overwhelm the consumers of that data and raises security and privacy concerns. Infrastructure designers and planners increasingly require detailed three-dimensional (3D) geospatial databases of the Built Environment in order to consider human-scale perceptions, which today are often overlooked but are critical to improving cities so that they better meet the needs of their citizens and of emerging technologies such as autonomous vehicles. The impact on society of applying Artificial Intelligence (AI) to rapidly create secure and intelligent 3D models of the Built Environment, including horizontal (e.g., highways, bridges) and vertical (e.g., buildings, plants) facilities, by turning raw data into actionable information cannot be overstated.

Current workflows and procedures for developing 3D models of the Built Environment require substantial manual effort. The processes that are automated are limited to small datasets that are not representative of the point clouds and other data currently being acquired or needed for Building Information Modeling (BIM). They are also limited in the types of objects that can be modeled. To this end, the interdisciplinary research team proposes novel algorithms to develop a more holistic Scan-to-BIM process that will (1) enable computer vision algorithms and approaches to scale to large datasets (hundreds of millions to billions of points) by exploiting the data acquisition structure and fundamental geomatics principles, (2) extract and classify objects through deep learning processes followed by human interaction, (3) expand the range of object types that can be extracted, notably to include a broader class of objects, as well as the level of detail of the extraction, (4) perform 3D solid modeling rather than “3D” geometric modeling, (5) determine and populate attributes (e.g., RGB, intensity for material types) for those 3D solid model objects in BIM, and (6) develop effective BIM validation techniques. All of these enhancements will require a substantial amount of fundamental research to explore and identify the most efficient strategies. These novel algorithms will also provide both computational and performance advantages, and they have the potential to become a new mainstream architecture for Convolutional Neural Networks (CNNs) that supports a wide range of applications.